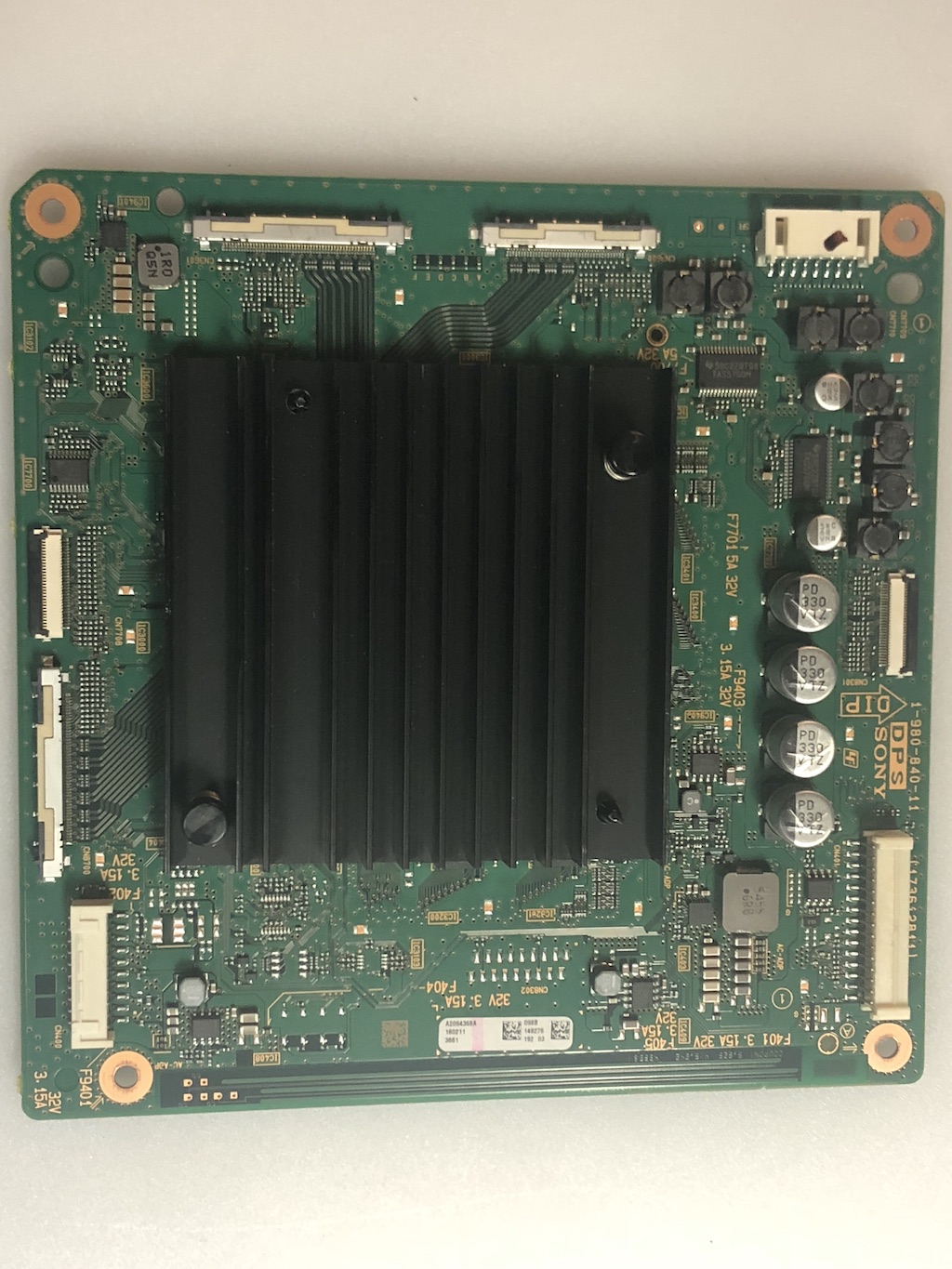

Tokyo, Japan - Sony Corporation announced today that it has developed a stacked direct Time of Flight (dToF) depth sensor for automotive LiDAR using single-photon avalanche diode (SPAD) pixels, an industry first*1. This achievement was announced at the International Solid-State Circuits Conference (ISSCC), which opened on February 13, 2021.

In addition to sensing devices such as cameras and millimeter wave radar, LiDAR is becoming ever more important as a method for high-precision detection and recognition of not only road conditions but also the location and shape of objects such as vehicles and pedestrians. This trend is being driven by the popularization of advanced driver assistance systems (ADAS) and the need for this technology in autonomous driving (AD).

SPAD is a pixel structure that uses avalanche multiplication to amplify the electrons generated by a single incident photon, causing a cascade like an avalanche, so it can detect even weak light. A dToF sensor measures the distance to an object from the time of flight (time difference) between the moment light is emitted from a light source and the moment it returns to the sensor after being reflected by the object. Employing SPAD as the detector in a dToF sensor makes long-distance, high-precision distance measurement possible.

Now, by leveraging technologies that Sony cultivated in the development of CMOS image sensors, such as the back-illuminated pixel structure, stacked configurations and Cu-Cu connections*2, and by integrating the SPAD pixels and the distance measuring processing circuitry on a single chip, Sony has succeeded in developing a compact yet high-resolution sensor.
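The dToF principle comes down to one relation: the emitted light travels to the object and back, so the one-way distance is half the round-trip path, d = c·Δt/2. The Python sketch below only illustrates that arithmetic. The function name `dtof_distance_m` and the example timing value are hypothetical, and nothing here describes how Sony's sensor actually processes its measurements.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def dtof_distance_m(round_trip_time_s: float) -> float:
    """Hypothetical helper: distance to a target from a measured round-trip time.

    The emitted light covers the sensor-to-object path twice (out and back),
    so the one-way distance is half of speed_of_light * time_of_flight.
    This ignores the refractive index of air and any timing offsets.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Example: a return pulse detected 1 microsecond after emission.
    t = 1e-6
    print(f"{dtof_distance_m(t):.2f} m")  # prints "149.90 m"
```

A 1 µs round trip therefore corresponds to roughly 150 m. The same relation shows why dToF timing must resolve very short intervals: 1 ns of timing error shifts the distance estimate by about 15 cm.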
